'vibecoded' saas are a privacy nightmare

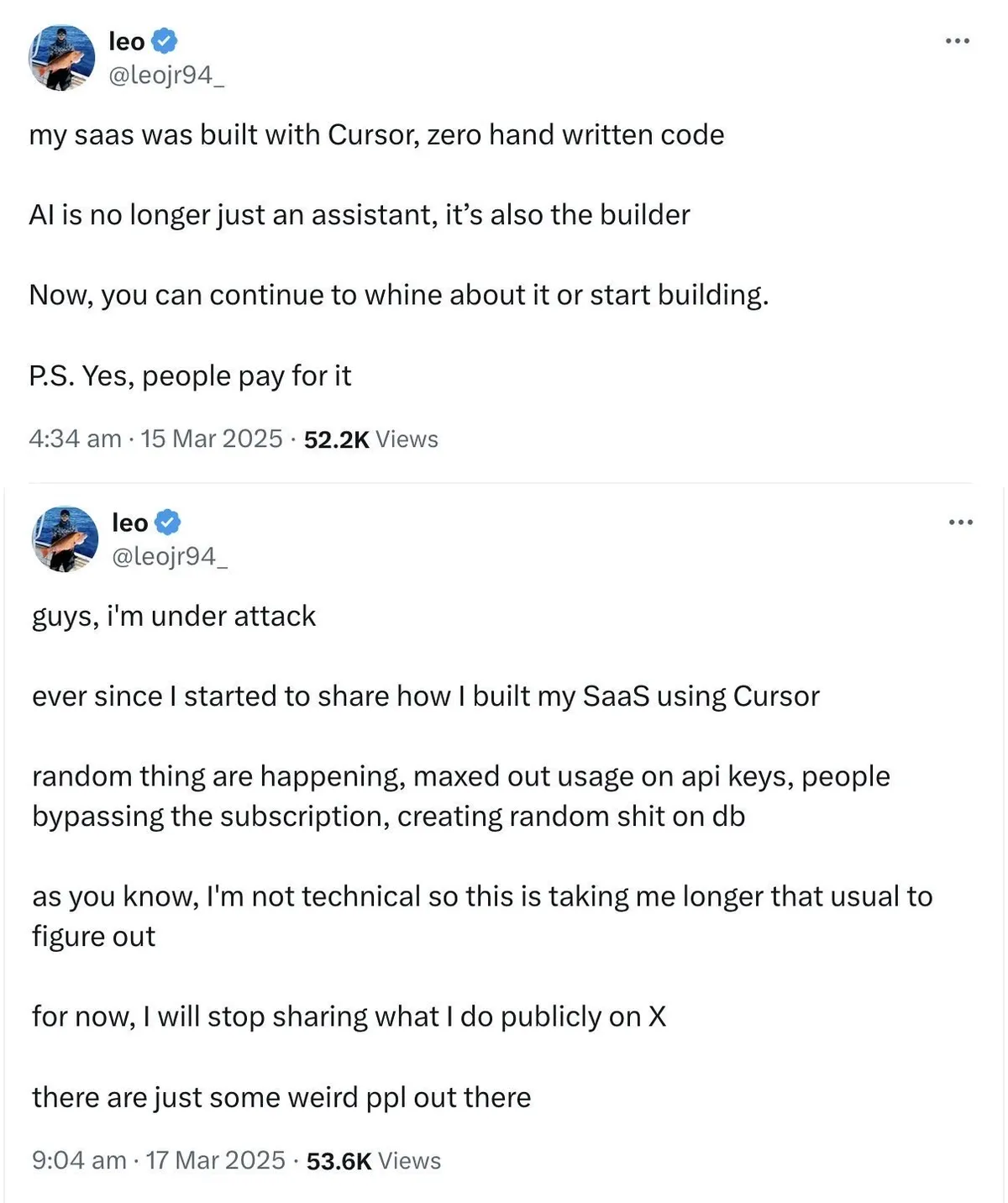

As the term and concept of 'vibecoding'[1] takes off, I watch in horror as people publicly admit things like this:

This isn't about that person or product specifically; it's just an example of what we're about to see more of: quickly generated SaaS[2] with no thought spent on security, no experience to draw from, and no willingness to invest in that aspect.

Just look at what else they, and I am sure many others like them, are bragging about:

It's scary to me. These are the people we trust with our personal data: we download their apps and create accounts on their websites. That means our names, email addresses, phone numbers and birthdays and, depending on the product, linked social media accounts, health data, location data and more. And that's just you as a customer. What if you use their services for your own users and customers, or the product promises to do something to third parties? In this case, their company 'EnrichLead' promises to turn anonymous website visitors into leads and get their emails, phone numbers and social profiles. Whew.

They're willing to compromise all that because they see SaaS as an opportunity to blow up and get rich with minimal costs and no employees. Peak hustle culture. To them, creating a business and earning money with these tools is easy, and other hustlers online have downright romanticized it, so it's desirable. But they aren't necessarily willing to take on the responsibility a business owner should. They're promoting rushing, breaking things and taking shortcuts, and it's worrying. It reminds me of "Big Tech Has Disrupted the Social Contract" by Eddie Huang, where he says:

"Big Tech has sold people on the dream of becoming their own business knowing that most people are incapable. That’s why we’re left to fend for ourselves when there are disputes. [...] These tech companies used lobbies to destroy the enterprise structure of our civilization from taxi cab medallions to hotels to brick-and-mortar businesses. They gutted every industry of jobs held by individuals, they robbed us of our protections, and left us to fend for ourselves. When people and corporations told us that they would disrupt industry, did any of us ask what would happen after that disruption? Would anyone actually take possession and be held responsible?"

That's more about big companies like Uber creating the gig economy, but I feel it also applies to smaller fish and to the general phenomenon of AI making it easy for anyone to create digital platforms and services. In theory, I am all for it: I want to see people create their own Instagram, their own Snapchat, their own Jellyfin or whatever for their friends and family, and not rely on Big Tech or unknown strangers to do it for them. I want them to have the resources to do that. But it should be done properly, in a way that ensures a basic level of safety for people's personal data, and even more so when it's run as a company to earn money!

It's easy to think your product is just an amalgamation of other companies' services, that they must be secure because everyone uses them, and that if they aren't, it's not your problem. As this person mentions, it's all just Vercel, Cursor, Firebase, etc., right? That doesn't mean security is out of your hands, or that nothing in your code and setup could leave all your users vulnerable. Firebase is notorious for being misconfigured and has already been exploited a lot; there are even tools like FirebaseExploiter and Pyrebase to help anyone who's dedicated enough.
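A lot of that misconfiguration comes down to the security rules. As a minimal sketch, assuming a Firebase Realtime Database whose rules are exported as JSON (the `{"rules": {...}}` format), a few lines of Python can flag any path where `.read` or `.write` is simply `true`, meaning open to anyone on the internet. The helper `find_public_rules` is hypothetical, not part of any Firebase tool, and it is only a smoke test: a rule like `"auth != null"` still lets *any* signed-in user read everything, which this check does not catch.

```python
import json


def find_public_rules(rules: dict, path: str = "") -> list[str]:
    """Hypothetical helper: walk a Realtime Database rules tree and report
    every path whose ".read" or ".write" is the literal value true."""
    findings = []
    for key, value in rules.items():
        if key in (".read", ".write"):
            # true (or the string "true") grants access to everyone,
            # signed in or not
            if value is True or value == "true":
                findings.append(f"{path or '/'} {key}")
        elif isinstance(value, dict):
            # Nested objects are child paths, including $wildcard segments
            findings.extend(find_public_rules(value, f"{path}/{key}"))
    return findings


if __name__ == "__main__":
    # A "test mode" style setup that can easily end up in production
    example = json.loads("""
    {
      "rules": {
        ".read": true,
        ".write": true,
        "users": {
          "$uid": {
            ".read": "auth != null && auth.uid == $uid",
            ".write": "auth != null && auth.uid == $uid"
          }
        }
      }
    }
    """)
    for finding in find_public_rules(example["rules"]):
        print("PUBLIC:", finding)
```

Run on the example, this reports the root `.read` and `.write`. Because `.read`/`.write` grants cascade down the tree in Realtime Database rules, those two root-level lines override the careful per-user rules beneath them, so the whole database is public despite looking locked down.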

There will always be "weird people", as the original poster said. People have always tried to hack and experiment, for fun, for reassurance because they themselves are users, or maliciously. This isn't new. Dealing with it is the bread and butter of companies and people offering digital services. This naïveté is dangerous, and it gambles not only with people's privacy but with their livelihoods: the data these services typically hold is usually enough to try the passwords elsewhere, answer security questions, reset passwords, and gain further access once the email account has been taken over. SIM hijacking can still be an issue, and these accounts contain enough information to make social engineering easy. You can seriously ruin someone's day with this, if not significantly harm their life. You can hand responsibility for payment info to Stripe all you want; it won't save you if you open up every other attack vector on your customers that still gives attackers access to their payment accounts.

This is not something to take lightly just because making money is cool, you think it's your turn to run a profitable SaaS with minimal effort, and you're obsessed with supposed passive income at the lowest possible cost. What are people paying you for?

This is a general issue, but to pick a more localized one within it: what about EU citizens and their personally identifiable information (PII)? If you process EU citizens' data as a company, and not as a natural person for family or private purposes, you need to comply with the GDPR, even when you are a small company. How do you plan to comply with the principle of integrity and confidentiality[3] if you vibecode recklessly, leaving preventable and fixable vulnerabilities and attack vectors because you're not interested in learning it properly and creating things the hard way? Do you also trust AI to whip up privacy policies, or include them automatically, without double-checking?

You don't get to skimp on people's safety and brag about how much money and time you saved. This isn't playing with toys; you have to get real about what you're processing and accept that your customers aren't just money-generating numbers, they're people. These Slop Enthusiasts are putting up professional fronts and want to be taken seriously as service providers while it looks like a mess behind the scenes. I fear that in the near future we'll see tons and tons of data breaches from these slop SaaS products that posed as reputable companies.


Published 20 Mar, 2025

[1] "Vibe coding is an AI-dependent programming technique where a person describes a problem in a few sentences as a prompt to a large language model (LLM) tuned for coding. The LLM generates software, shifting the programmer’s role from manual coding to guiding, testing, and refining the AI-generated source code. Vibe coding is claimed by its advocates to allow even amateur programmers to produce software without the extensive training and skills previously required for software engineering." (Wikipedia)

[2] "Software as a service (SaaS) is a cloud computing service model where the provider offers use of application software to a client and manages all needed physical and software resources." (Wikipedia)

[3] "Personal data shall be processed in a manner that ensures appropriate security of the personal data, including protection against unauthorised or unlawful processing and against accidental loss, destruction or damage, using appropriate technical or organisational measures." Art. 5(1)(f) GDPR